NSX - Using VXLAN logical switches to transport vSAN and vMotion traffic

The title seems weird, and at first glance we might think… NO WAY!

However, let's dig a bit deeper: what is the idea behind it?

Context

The title sounds weird and could also be misleading.

So let's clear it up a bit, to make it easier to understand what will be discussed in this post.

We will not be running VMware vSAN (vSAN) and VMware vMotion (vMotion) traffic on top of the VXLAN VTEP VMkernel interfaces.

What we will be doing instead is running Nested Hypervisor clusters on top of logical switches.

The main objective here was to leverage the VMware NSX (NSX) software-defined solution to reduce the number of changes in the physical underlay and speed up the Nested Hypervisors cluster provisioning process.

Remember, this is a Lab environment; this is definitely not recommended for a Production Environment. Some of the configurations and tweaks needed here touch or cross the line of what is supported in production.

Analysis

We will go through some key points to justify the conclusions and explain why all of this works.

VXLAN

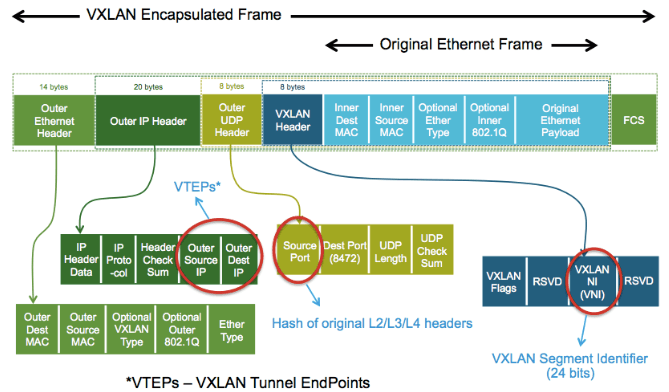

The Virtual Extensible LAN (VXLAN) is an encapsulation protocol that provides a way to extend L2 networks over an L3 infrastructure by using MAC-in-UDP encapsulation and tunneling.
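To make "MAC-in-UDP" a bit more concrete, here is a minimal sketch of the 8-byte VXLAN header as laid out in RFC 7348 (an 8-bit flags field with the "I" bit set, a 24-bit VXLAN Network Identifier, and reserved bits). The whole inner Ethernet frame simply follows that header inside the UDP payload. The function names and the example VNI 5001 are hypothetical, and the outer Ethernet/IP/UDP headers that a real VTEP adds are deliberately not built here.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag (RFC 7348): set when the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    word1 = VXLAN_FLAG_VNI_VALID << 24  # flags in the top byte, 24 reserved bits = 0
    word2 = vni << 8                     # VNI in the top 24 bits, 8 reserved bits = 0
    return struct.pack("!II", word1, word2)  # network byte order


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Return the UDP payload a VTEP would send: VXLAN header + full inner Ethernet frame.

    The outer Ethernet/IP/UDP headers are added by the sending VTEP's IP stack
    and are not built in this sketch.
    """
    return vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    # Hypothetical inner frame: broadcast dst MAC, example src MAC, EtherType IPv4, dummy data.
    inner = bytes.fromhex("ffffffffffff" "005056000001" "0800") + b"payload"
    udp_payload = encapsulate(inner, vni=5001)  # 5001 is just an example segment ID
    print(udp_payload[:8].hex())           # -> 0800000000138900
    print(len(udp_payload) - len(inner))   # -> 8
```

From the nested host's point of view, the inner frame arrives untouched, which is exactly why the logical switch looks like a plain L2 segment.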

At this point, we have the ability to present L2 networks to the Nested Hypervisors cluster as if they were a normal VLAN or L2 network.

So far, there is no showstopper to what we are doing, since from the Nested Hypervisors cluster's point of view there is no difference.


We kept digging and checked the vSAN and vMotion requirements from a networking point of view.

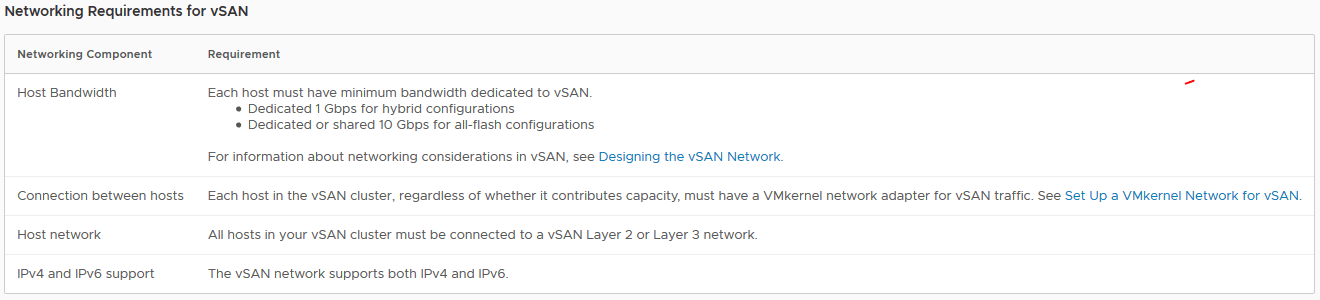

vSAN network requirements

link: VMware Docs - Networking Requirements for vSAN

- Bandwidth
  - Dedicated 1Gbps for hybrid configurations
  - Dedicated or shared 10Gbps for all-flash configurations
- Connection between hosts
  - Each host in the vSAN cluster needs a VMkernel network adapter for vSAN traffic
- Host network
  - All hosts in the vSAN cluster must be connected to a vSAN L2 or L3 network
- IPv4 and IPv6 support
  - The vSAN network supports both IPv4 and IPv6
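One way to sanity-check a nested lab against the requirements above is to describe each host's vSAN connectivity and flag anything that does not match. The sketch below is hypothetical: `VsanUplink` and `vsan_network_issues` are illustrative names, not a vSphere API, and the "all hosts on one network" check is a lab assumption (a single logical switch for vSAN), since vSAN itself also allows routed L3 designs.

```python
from dataclasses import dataclass


@dataclass
class VsanUplink:
    """Hypothetical view of how one (nested) host reaches the vSAN network."""
    host: str
    has_vsan_vmk: bool     # a VMkernel adapter is enabled for vSAN traffic
    network: str           # e.g. the logical switch backing that adapter
    bandwidth_gbps: float  # link speed available to vSAN traffic
    dedicated: bool        # True if that bandwidth is not shared with other traffic


def vsan_network_issues(uplinks: list[VsanUplink], all_flash: bool) -> list[str]:
    """Return human-readable violations of the vSAN networking requirements."""
    issues = []
    for u in uplinks:
        if not u.has_vsan_vmk:
            issues.append(f"{u.host}: no VMkernel adapter for vSAN traffic")
        if all_flash and u.bandwidth_gbps < 10:
            # All-flash: 10 Gbps, dedicated or shared.
            issues.append(f"{u.host}: all-flash needs 10 Gbps")
        if not all_flash and (u.bandwidth_gbps < 1 or not u.dedicated):
            # Hybrid: dedicated 1 Gbps.
            issues.append(f"{u.host}: hybrid needs a dedicated 1 Gbps link")
    # Lab assumption: every host sits on one shared logical switch for vSAN.
    if len({u.network for u in uplinks}) > 1:
        issues.append("hosts are not all on the same vSAN network")
    return issues


if __name__ == "__main__":
    lab = [VsanUplink(f"nested-esxi-0{i}", True, "LS-vSAN", 10.0, False) for i in (1, 2, 3)]
    print(vsan_network_issues(lab, all_flash=True))        # -> []
    print(len(vsan_network_issues(lab, all_flash=False)))  # -> 3 (shared, not dedicated)
```

Note that nothing in these checks cares whether `LS-vSAN` is a VLAN-backed port group or a VXLAN logical switch, which is the point of the exercise.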

Going through the requirements, it seems that a logical switch still fulfills all the basic requirements, so we should be OK. We will potentially take some performance hit, but it should work.
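To put a rough number on that performance hit, here is a back-of-the-envelope sketch of the VXLAN encapsulation overhead. It assumes an IPv4 underlay, untagged inner and outer frames (no 802.1Q), and standard header sizes (14-byte Ethernet, 20-byte IPv4 without options, 8-byte UDP, 8-byte VXLAN). It ignores FCS, preamble and inter-frame gap, as well as any CPU cost of encapsulation, so treat it as an approximation only.

```python
INNER_ETHERNET = 14  # inner Ethernet header carried inside the VXLAN payload
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20      # no IP options
OUTER_ETHERNET = 14  # untagged outer frame


def underlay_ip_mtu(inner_ip_mtu: int) -> int:
    """Minimum IP MTU the underlay must carry so the inner packet is not fragmented."""
    # Outer IPv4 packet = outer IPv4 + UDP + VXLAN + full inner Ethernet frame.
    return OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET + inner_ip_mtu


def encapsulation_overhead(inner_ip_mtu: int) -> float:
    """Extra bytes per full-size frame, relative to the unencapsulated frame."""
    inner_frame = INNER_ETHERNET + inner_ip_mtu
    added = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER  # 50 bytes
    return added / inner_frame


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(mtu, underlay_ip_mtu(mtu), f"{encapsulation_overhead(mtu):.1%}")
    # -> 1500 1550 3.3%
    # -> 9000 9050 0.6%
```

The byte overhead itself is small; the same 50 extra bytes are also why the underlay needs an MTU above 1500 for the nested hosts to keep a standard 1500-byte MTU, which is why NSX asks for an underlay MTU of at least 1600.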


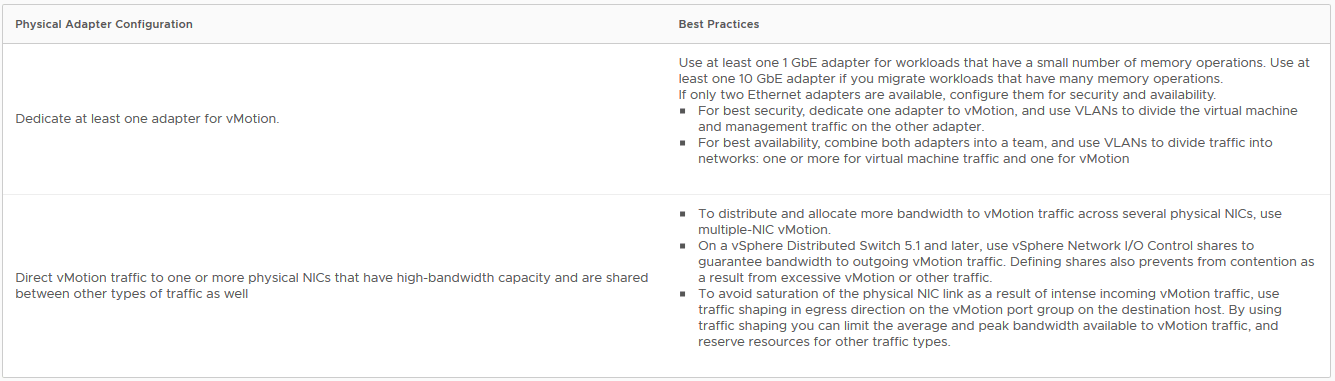

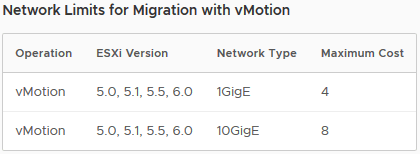

vMotion network requirements

link: VMware Docs - vSphere vMotion Networking Requirements

link: Networking Best Practices for vSphere vMotion

- Bandwidth
- Connection between hosts
  - Each host in the cluster needs a VMkernel port group configured for vMotion
- Host Network
  - At least one network interface for vMotion traffic
- IPv4 and IPv6 support
  - vMotion supports both IPv4 and IPv6
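The vMotion requirements can be checked the same way. This is again a hypothetical sketch: `VmkAdapter` and `vmotion_issues` are illustrative names, not vSphere API objects, and the "single shared network" check reflects the lab assumption that all vMotion VMkernel adapters sit on one logical switch.

```python
from dataclasses import dataclass, field


@dataclass
class VmkAdapter:
    """Hypothetical VMkernel adapter description (not a vSphere API object)."""
    name: str        # e.g. "vmk1"
    network: str     # port group or logical switch it is attached to
    ip_version: int  # 4 or 6
    services: set[str] = field(default_factory=set)  # e.g. {"vmotion"}


def vmotion_issues(hosts: dict[str, list[VmkAdapter]]) -> list[str]:
    """Check that every host has at least one VMkernel adapter enabled for vMotion."""
    issues = []
    networks = set()
    for host, vmks in hosts.items():
        vmotion_vmks = [v for v in vmks if "vmotion" in v.services]
        if not vmotion_vmks:
            issues.append(f"{host}: no VMkernel adapter enabled for vMotion")
        for v in vmotion_vmks:
            if v.ip_version not in (4, 6):
                issues.append(f"{host}/{v.name}: unexpected IP version {v.ip_version}")
            networks.add(v.network)
    # Lab assumption: all vMotion adapters share one logical switch.
    if len(networks) > 1:
        issues.append(f"vMotion adapters span several networks: {sorted(networks)}")
    return issues


if __name__ == "__main__":
    lab = {
        "nested-esxi-01": [VmkAdapter("vmk0", "LS-Mgmt", 4, {"management"}),
                           VmkAdapter("vmk1", "LS-vMotion", 4, {"vmotion"})],
        "nested-esxi-02": [VmkAdapter("vmk0", "LS-Mgmt", 4, {"management"})],
    }
    print(vmotion_issues(lab))
    # -> ['nested-esxi-02: no VMkernel adapter enabled for vMotion']
```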

It seems that we are still OK from a vMotion point of view.


Conclusion

Since logical switches seem to fulfill all the vSAN and vMotion requirements, we should be OK to use them as the L2 underlay for the Nested Hypervisors cluster.

By removing the need for changes in the physical underlay, it will be easier to automate the Nested Environments provisioning process.

I will go through some of those automation bits in upcoming posts.

But to summarize the whole post: in theory, we should not have any problems running vSAN and vMotion on top of logical switches for the Nested Hypervisors cluster.